2 Mathematics

2.1 Algebra

2.1.1 Laws

| Commutative | \[\begin{equation*} a+b = b+a ,\, ab = ba \end{equation*}\] |

| Associative | \[\begin{equation*} a+(b+c) = (a+b)+c\end{equation*}\] |

| Distributive | \[\begin{equation*} a(b+c) = ab+ac\end{equation*}\] |

2.1.2 Identities

| Exponents | Logarithms1 |

|---|---|

| \[\begin{equation*}a^x a^y = a^{x+y}\end{equation*}\] | \[\begin{equation*}\log_b{b} = 1\end{equation*}\] |

| \[\begin{equation*}\left( ab \right) ^x = a^x b^x \end{equation*}\] | \[\begin{equation*}\log_b{1} = 0\end{equation*}\] |

| \[\begin{equation*}\left( a^x \right) y = a^xy \end{equation*}\] | \[\begin{equation*}\log_b \left( MN \right) = log_b{M} + log_b{N}\end{equation*}\] |

| \[\begin{equation*}a^{mn} = \left( a^m \right) ^n \end{equation*}\] | \[\begin{equation*}\log_b \left( \frac{M}{N} \right) = \log_b{M} - \log_b{N}\end{equation*}\] |

| \[\begin{equation*}a^0 = 1\end{equation*}\] If \(a \neq 0\) | \[\begin{equation*}\log_b \left( M^p \right) = p \log_b{M}\end{equation*}\] |

| \[\begin{equation*}a^{-x} = \frac{1}{a^x}\end{equation*}\] | \[\begin{equation*}\log_b \left( \frac{1}{M} \right) = -\log_b{M}\end{equation*}\] |

| \[\begin{equation*}\frac{a^x}{a^y} = a^{x-y}\end{equation*}\] | \[\begin{equation*}\log_b{\sqrt[q]{M}} = \frac{1}{q} \log_b{M}\end{equation*}\] |

| \[\begin{equation*}\sqrt[x]{ab} = \left( \sqrt[x]{a} \right) \left( \sqrt[x]{b} \right)\end{equation*}\] | \[\begin{equation*}\log_b{M} = \left(\log_c{M} \right) \left(\log_b{c} \right)= \frac{\log_c{M}}{\log_c{b}}\end{equation*}\] |

| \[\begin{equation*} a^{\frac{x}{y}} = \sqrt[y]{a^x} = \left( \sqrt[y]{a} \right)^x \end{equation*}\] | |

| \[\begin{equation*} a^{\frac{1}{y}} = \sqrt[y]{a}\end{equation*}\] | |

| \[\begin{equation*}\left( \sqrt[x]{a} \right) \left( \sqrt[y]{a} \right) = a^{\left( \frac{1}{x} + \frac{1}{y} \right)} = \sqrt[xy]{a^{x+y}}\end{equation*}\] | |

| \[\begin{equation*}\sqrt{a} + \sqrt{b} = \sqrt{a + b + 2\sqrt{ab}}\end{equation*}\] |

Examples:

\[\begin{array}{lllll} \log (6.54) = 0.8156 &&&&\\ & \log (6540) &= \log (6.54 \times 10^3 ) &= 0.8156 + 3 &= \fbox{3.8156}\\ & \log ( 0.6540) &= \log (6.54 \times 10^{-1}) &= 0.8156 - 1 &= \fbox{9.8156 - 10}\\ & \log ( 0.000\,6540) &= \log (6.54 \times 10^{-4}) &= 0.8156 - 4 &= \fbox{6.8156 - 10}\\ \end{array}\] \[\begin{array}{lllll} \text{calculate } 68.31 \times 0.2754 &&&&\\ &\log (68.31) &= 1.8354&&\\ &\log ( 0.2754) &= - 0.56&&\\ &1.8354 +(- 0.56) &= 1.2745&&\\ &\log^{-1} (1.2745) &= \fbox{18.81}&&\\ \end{array}\] \[\begin{array}{lllll} \text{calculate } ( 0.6831)^{1.53} &&&&\\ & log ( 0.6831) &= - 0.1655&&\\ & 1.53 \times - 0.1655 &= - 0.253&&\\ & log^{-1} (- 0.253) &= \fbox{ 0.5582}&& \end{array}\] \[\begin{array}{lllll} \text{calculate } ( 0.6831)^{\frac{1}{5}} &&&&\\ & log ( 0.6831) &= - 0.1655&&\\ & \frac{1}{5} \times - 0.1655 &= - 0.0331&&\\ & log^{-1} (- 0.0331) &= \fbox{ 0.9266}&& \end{array}\] \[\begin{array}{lllll} \text{solve for $x$ in $ 0.6931^x = 27.54$ }&&&&\\ & log ( 0.6931^x) &= log (27.54)&&\\ & x log ( 0.6931) &= log (27.54)&&\\ & x &= \frac{log (27.54 )}{log ( 0.6931)}&&\\ & x &= \frac{1.44}{- 0.1655} &= \fbox{-8.701} \end{array}\]2.1.3 Equations

Quadratic Equation

\[\begin{equation*}ax^2 + bx + c =0\end{equation*}\]

Two roots, both real or both complex

\[\begin{equation*}x_{1,2} = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}\end{equation*}\]

Cubic Equation

\[\begin{equation*}y^3 + py^2 + qy + r = 0\end{equation*}\]

Three roots, all real or one real & two complex

Let \(y = x - \frac{p}{3}\) to rewrite equation in form of \(x^3 + ax + b = 0\)

where \(a = \frac{3q - p^2}{3}\) and \(b = \frac{2p^3 - 9pq - 27r}{27}\)

let

\[\begin{equation*} A = \sqrt[3]{-\frac{b}{2} + \sqrt{\frac{b^2}{4} + \frac{a^3}{27}}}\end{equation*}\]

and

\[\begin{equation*}B =\sqrt[3]{-\frac{b}{2} - \sqrt{\frac{b^2}{4} + \frac{a^3}{27}}}\end{equation*}\]

then

\[\begin{align} x_1 &= A + B\\ x_2 &= \frac{-(A + B)}{2} + \frac{\sqrt{-3}}{2} (A - B)\\ x_3 &= \frac{-(A + B)}{2} - \frac{\sqrt{-3}}{2} (A - B) \end{align}\]

Special cases:

If \(\frac{b^2}{4} + \frac{a^3}{27} < 0\) , then the real roots are

\[\begin{equation*}x_{1,2,3} = 2 \sqrt{\frac{-a}{3}} cos \left( \frac{\phi}{3} + 120° k \right)\end{equation*}\]

where \(k = 0,1,2\)

and

\[\begin{equation*}cos\phi = + \sqrt{\frac{ \frac{b^2}{4} }{ \frac{-a^3}{27} }} \;\;\;\text{if}\; b < 0\end{equation*}\]

or

\[\begin{equation*}cos\phi = - \sqrt{\frac{ \frac{b^2}{4} }{ \frac{-a^3}{27} }} \;\;\;\text{if}\; b > 0\end{equation*}\]

If \(\frac{b^2}{4} + \frac{a^3}{27} > 0\) and \(a > 0\) , the single real root is

\[\begin{equation*}x = 2 \sqrt{\frac{a}{3}} cot \left( 2\phi \right)\end{equation*}\]

where \(tan\phi = \sqrt[3]{tan\psi}\)

and

\[\begin{equation*} cot \left( 2\psi \right) = + \sqrt{\frac{ \frac{b^2}{4} }{ \frac{-a^3}{27} }} \;\;\;\text{if}\; b < 0\end{equation*}\]

or

\[\begin{equation*} cot \left( 2\psi \right) = - \sqrt{\frac{ \frac{b^2}{4} }{ \frac{-a^3}{27} }} \;\;\;\text{if}\; b < 0\end{equation*}\]

If \(\frac{b^2}{4} + \frac{a^3}{27} = 0\), the three real roots are

\[\begin{equation*} x_{1} = -2 \sqrt{\frac{-a}{3}}, \; x_{2,3} = +\sqrt{\frac{-a}{3}} \;\;\; \text{if} \; b > 0 \end{equation*}\]

or

\[\begin{equation*} x_{1} = +2 \sqrt{\frac{-a}{3}}, \; x_{2,3} = -\sqrt{\frac{-a}{3}} \;\;\; \text{if} \; b < 0 \end{equation*}\]

Quartic (biquadratic) Equation

For

\[\begin{equation*}y^4 + py^3 + qy^2 + ry + s = 0\end{equation*}\]

let \(y = x - \frac{p}{4}\) to rewrite equation as

\[\begin{equation*}x^4 + ax^2 + bx + c = 0\end{equation*}\]

let \(l\), \(m\), \(n\) denote roots of the following resolvent cubic:

\[\begin{equation*} t^3 + \frac{1}{2} at^2 + \frac{1}{16} \left( a^2 - 4c \right) t - \frac{1}{64}b^2 = 0 \end{equation*}\]

The roots of the quartic are

\[\begin{equation*} x_{1} = + \sqrt{l} + \sqrt{m} + \sqrt{n}\end{equation*}\] \[\begin{equation*} x_{2} = + \sqrt{l} - \sqrt{m} - \sqrt{n}\end{equation*}\] \[\begin{equation*} x_{3} = - \sqrt{l} + \sqrt{m} - \sqrt{n}\end{equation*}\] \[\begin{equation*} x_{4} = - \sqrt{l} - \sqrt{m} + \sqrt{n}\end{equation*}\]

2.1.4 Interest And Annuities

Amount:

\(P\) principal at \(i\) interest for \(n\) time accumulates to amount \(A_{n}\).

Simple interest:

\[\begin{equation*}A_{n} = P(1 + ni)\end{equation*}\]

at interest compounded each \(n\) interval:

\[\begin{equation*}A_{n} = P(1 + i)^n \end{equation*}\]

at interest compounded \(q\) times per \(n\) interval:

\[\begin{equation*}A_{n} = P(1 + \frac{r}{q})^{nq}\end{equation*}\]

where \(r\) is the nominal (quoted) rate of interest

Effective Interest:

The rate per time period at which interest is earned during each period is called the effective rate \(i\).

\[\begin{equation*}i = \left( 1 + \frac{r}{q} \right)^q -1\end{equation*}\]

Solve above equations for \(P\) to determine investment required now to accumulate to amount \(A_{n}\)

True discount,

\[\begin{equation*}D = A_{n} - P\end{equation*}\]

Annuities:

rent \(R\) is consistent payment at each period \(n\)

let

\[\begin{equation*}s_n \equiv \frac{\left( 1 + i \right)^n - 1}{i}\end{equation*}\]

and let

\[\begin{equation*}r_n \equiv \frac{1 - \left( 1 + i \right)^{-n}}{i}\end{equation*}\]

then \(A_n = R\,s_{n}\)

or

\[\begin{equation*}n = \frac{\log \left( A_n + R \right) - \log R }{\log \left(1 + i \right)}\end{equation*}\]

Present value of an annuity, \(A\) is the sum of the present values of all the future payments. \(A = R\,r_{n}\)

Monthly interest rate = \(\mathrm{MIR} = \frac{ \text{annual interest rate} }{12}\)

Month Term = # months in loan

Monthly payment = \(\left[ \text{amount financed} \right] \left[ \frac{\mathrm{MIR}}{1 - \left( 1 + \mathrm{MIR} \right)^{-\#months}} \right]\)

Final value (\(FV\)) of an investment is a function of the initial principal invested (P), interest rate (\(r\) expressed as \(0.05\) for \(5%\), \(0.1\) for \(10%\), etc.), time invested (\(Y\) typically years), and compounding periods per year (\(n\) typically \(n = 1\) for yearly or \(n = 12\) for monthly).

\[\begin{equation*}FV = P (1 + \frac{r}{n})^{Y_n}\end{equation*}\]

2.2 Geometry

(Burington 1973; Moyer and Ayres 2013 )

General definitions

| Symbol | Definition |

|---|---|

| \(A\) | area |

| \(a\) | side length |

| \(b\) | base length |

| \(C\) | circumference |

| \(D\) | diameter |

| \(h\) | height |

| \(n\) | number of sides |

| \(R\) | radius |

| \(V\) | volume |

| \(x, y, z\) | distances along orthogonal coordinate system |

| \(\beta\) | interior vertex angle |

Triangle

\[\begin{align} A &= \frac{bh}{2}\\ \text{sum of interior angles} &= 180° \end{align}\]

Rectangle

\[\begin{align} A &= bh\\ \text{sum of interior angles} &= 360° \end{align}\]

Parallelogram (opposite sides parallel)

\[\begin{equation*} A = ah = ab \sin \beta \end{equation*}\]

Trapezoid (4 sides, 2 parallel)

\[\begin{equation*} A = \frac{h \left(a + b \right)}{2}\end{equation*}\]

Pentagon, Hexagon, and other \(n\)-sided Polygons

\[\begin{align} A &= \frac{1}{4} n a^2 \cot \left( \frac{180°}{n} \right) \\ R &= \text{radius of circumscribed circle} = \frac{1}{2} a^2 \csc \left( \frac{180°}{n} \right) \\ r &= \text{radius of inscribed circle} = \frac{1}{2} a \cot \left( \frac{180°}{n} \right) \\ \beta &= 180° - \frac{360°}{n} \\ \text{sum of interior angles} &= n 180° - 360° \\ \end{align}\]

Circle

\[\begin{align} A &= \pi R^2 \\ C &= 2 \pi R = \pi D \\ \text{perimeter of n-sided polygon inscribed within a circle} &= 2 n R \sin \left(\frac{\pi}{n} \right) \\ \text{area of circumscribed polygon} &= n R^2 \tan \left( \frac{\pi}{n} \right) \\ \text{area of inscribed polygon} &= \frac{1}{2} n R^2 \sin \left( \frac{2\pi}{n} \right) \\ \text{equation for a circle with center at (h,k):} \\ R^2 &= \left(x-h \right)^2 + \left(y-k \right)^2 \\ \end{align}\]

Ellipse

\[\begin{align} f &= \text{semimajor axis} \\ g &= \text{semiminor axis} \\ e &= \text{eccentricity} = \frac{ \sqrt{f^2 -g^2} }{f} \\ A &= \pi e f \\ \text{equation for ellipse with center at (h,k):} \\ \frac{(x-h)^2 }{f^2} + \frac{(y-k)^2}{g^2} &= 1 \text{ if major axis along x-axis} \\ \text{or } \frac{(y-k)^2 }{f^2} + \frac{(x-h)^2}{g^2} &= 1 \text{ if major axis along y-axis} \\ \text{distance from center to either focus} &= \sqrt{f^2 -g^2} \\ \text{latus rectum} &= \frac{2g^2}{a} \\ \end{align}\]

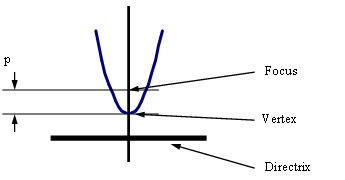

Parabola

\[\begin{align} p &= \text{distance from vertex to focus} \\ e &= \text{eccentricity} = 1 \\ \text{equation for parabola with vertex at (h,k), focus at (h+p,k):} \\ (y-k)^2 = 4j(x-h) \text{ if } j > 0 \\ \text{equation for parabola with vertex at (h,k), focus at (h,k+p):} \\ (x-h)^2 = 4j(y-k) \text{ if } j < 0 \\ \end{align}\]

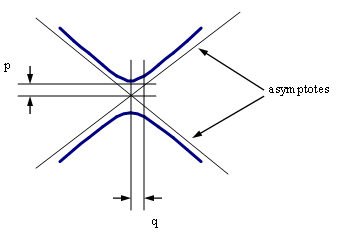

Hyperbola

\[\begin{align} p &= \text{distance between center and vertex} \\ q &= \text{distance between center and conjugate axis} \\ e &= \text{eccentricity} = \frac{ \sqrt{p^2 +q^2} }{p} \\ \text{equation for hyperbola centered at (h, k):} \\ \frac{(x-h)^2}{p^2} - \frac{(y-k)^2}{q^2} &= 1 \text{ if asymptotes slopes} = \pm \frac{q}{p} \\ \text{or } \frac{(y-k)^2}{p^2} - \frac{(x-h)^2}{q^2} &= 1 \text{ if asymptotes slopes} = \pm \frac{p}{q} \\ \end{align}\]

Sphere

\[\begin{align} A &= 4 \pi R^2 \\ V &= \frac{4}{3} \pi R^3 \\ \text{equation for sphere centered at origin:} \\ x^2 + y^2 + z^2 &= R^2 \\ \end{align}\]

Torus

\[\begin{align} \rho &= \text{smaller radius} \\ A &= 4 \pi ^2 R \rho \\ V &= 2 \pi ^2 R \rho^2 \\ \end{align}\]

2.3 Trigonometery

(Burington 1973; Moyer and Ayres 2013 )

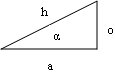

For any right triangle with hypotenuse \(h\), an acute angle \(\alpha\), side length \(o\) opposite from \(\alpha\), and side length \(a\) adjacent to \(\alpha\), the following terms are defined:

\[\begin{align} \text{sine } \alpha &= \sin{\alpha} = \frac{o}{h} \\ \text{cosine } \alpha &= \cos{\alpha} = \frac{a}{h} \\ \text{tangent } \alpha &= \tan{\alpha} = \frac{o}{a} = \frac{\sin{\alpha}}{cos{\alpha}} \\ \text{cotangent } \alpha &= \cot{\alpha} = \text{ctn } \alpha = \frac{a}{o} = \frac{1}{\tan{\alpha}} = \frac{\cos{\alpha}}{\sin{\alpha}} \\ \text{secant } \alpha &= \sec{\alpha} = \frac{h}{a} = \frac{1}{\cos{\alpha}} \\ \text{cosecant } \alpha &= \csc{\alpha} = \frac{h}{o} = \frac{1}{\sin{\alpha}} \\ \text{exsecant } \alpha &= \text{exsec } \alpha = \sec{\alpha} - 1 \\ \text{versine } \alpha &= \text{vers } \alpha = 1 - \cos{\alpha} \\ \text{coversine } \alpha &= \text{covers } \alpha = 1 - \sin{\alpha} \\ \text{haversine } \alpha &= \text{hav } \alpha = \frac{\text{vers } \alpha}{2} \\ \end{align}\]

also defined are the following…

\[\begin{align} \text{hyperbolic sine of } x &= \sinh{x} = \frac{\mathrm{e}^x - \mathrm{e}^{-x}}{2} \\ \text{hyperbolic cosine of } x &= \cosh{x} = \frac{\mathrm{e}^x + \mathrm{e}^{-x}}{2} \\ \text{hyperbolic tangent of } x &= \tanh{x} = \frac{\sinh{x}}{\cosh{x}} = \frac{\mathrm{e}^x - \mathrm{e}^{-x}}{\mathrm{e}^x + \mathrm{e}^{-x}} \\ \text{csch } x &= \frac{1}{\sinh{x}} \\ \text{sech } x &= \frac{1}{\cosh{x}} \\ \text{coth } x &= \frac{1}{\tanh{x}} \\ \end{align}\]

Identities

Pythagorean Identities:

\[\begin{align} \sin^2{\alpha} + \cos^2{\alpha} &= 1 \\ 1 + \tan^2{\alpha} &= \sec^2{\alpha} \\ 1 + \cot^2{\alpha} &= \csc^2{\alpha} \\ \end{align}\]

Half Angle Identities:

\[\begin{align} \sin{\frac{\alpha}{2}} &= \pm \sqrt{\frac{1 - \cos{\alpha}}{2}} \text{ (negative if } \frac{\alpha}{2} \text{ is in quadrant III or IV)}\\ \cos{\frac{\alpha}{2}} &= \pm \sqrt{\frac{1 + \cos{\alpha}}{2}} \text{ (negative if } \frac{\alpha}{2} \text{ is in quadrant II or III)}\\ \tan{\frac{\alpha}{2}} &= \pm \sqrt{\frac{1 - \cos{\alpha}}{1 + \cos{\alpha}}} \text{ (negative if } \frac{\alpha}{2} \text{ is in quadrant II or IV)}\\ \end{align}\]

Double-Angle Identities:

\[\begin{align} \sin{2\alpha} &= 2\sin{\alpha}\cos{\alpha}\\ \cos{2\alpha} &= 2\cos^2{\alpha} - 1 = 1 - 2\sin^2{\alpha} = \cos^2{\alpha} - \sin^2{\alpha}\\ \tan{2\alpha} &= \frac{2\tan{\alpha}}{1 - \tan^2{\alpha}}\\ \end{align}\]

n-Angle Identities:

\[\begin{align} \sin{3\alpha} &= 3\sin{\alpha} - 4\sin^3{\alpha}\\ \cos{3\alpha} &= 4\cos^3{\alpha} - 3\cos{\alpha}\\ \sin{n\alpha} &= 2\sin\big(\left(n-1\right)\alpha\big) \cos{\alpha} - \sin(n-2)\alpha\\ \cos{n\alpha} &= 2\cos\big(\left(n-1\right)\alpha\big) \cos{\alpha} - \cos\left(n-2\right)\alpha\\ \end{align}\]

Two-Angle Identities:

\[\begin{align} \sin\left(\alpha + \beta\right) &= \sin{\alpha}\cos{\beta} + \cos{\alpha}\sin{\beta}\\ \cos\left(\alpha + \beta\right) &= \cos{\alpha}\cos{\beta} - \sin{\alpha}\sin{\beta}\\ \tan\left(\alpha + \beta\right) &= \frac{\tan{\alpha} + \tan{\beta}}{1 - \tan{\alpha}\tan{\beta}}\\ \sin\left(\alpha - \beta\right) &= \sin{\alpha}\cos{\beta} - \cos{\alpha}\sin{\beta}\\ \cos\left(\alpha - \beta\right) &= \cos{\alpha}\cos{\beta} + \sin{\alpha}\sin{\beta}\\ \tan\left(\alpha - \beta\right) &= \frac{\tan{\alpha} - \tan{\beta}}{1 + \tan{\alpha}\tan{\beta}}\\ \end{align}\]

Sum and Difference Identities:

\[\begin{align} \sin{\alpha} + \sin{\beta} &= 2\sin{\frac{\alpha + \beta}{2}}\cos{\frac{\alpha - \beta}{2}}\\ \sin{\alpha} - \sin{\beta} &= 2\cos{\frac{\alpha + \beta}{2}}\sin{\frac{\alpha - \beta}{2}}\\ \cos{\alpha} + \cos{\beta} &= 2\cos{\frac{\alpha + \beta}{2}}\sin{\frac{\alpha - \beta}{2}}\\ \cos{\alpha} - \cos{\beta} &= -2\cos{\frac{\alpha + \beta}{2}}\sin{\frac{\alpha - \beta}{2}}\\ \tan{\alpha} + \tan{\beta} &= \frac{\sin\left(\alpha + \beta\right)}{\cos{\alpha}\cos{\beta}}\\ \cot{\alpha} + \cot{\beta} &= \frac{\sin\left(\alpha + \beta\right)}{\sin{\alpha}\sin{\beta}}\\ \tan{\alpha} - \tan{\beta} &= \frac{\sin\left(\alpha - \beta\right)}{\cos{\alpha}\cos{\beta}}\\ \cot{\alpha} - \cot{\beta} &= -\frac{\sin\left(\alpha - \beta\right)}{\sin{\alpha}\sin{\beta}}\\ \sin^2{\alpha} - \sin^2{\beta} &= \sin\left(\alpha + \beta\right) \sin\left(\alpha - \beta\right)\\ \cos^2{\alpha} - \cos^2{\beta} &= -\sin\left(\alpha + \beta\right) \sin\left(\alpha - \beta\right)\\ \cos^2{\alpha} - \sin^2{\beta} &= \cos\left(\alpha + \beta\right) \cos\left(\alpha - \beta\right)\\ \end{align}\]

Power Identities:

\[\begin{align} \sin{\alpha}\sin{\beta} &= \frac{\cos\left(\alpha - \beta\right) - \cos\left(\alpha + \beta\right)}{2}\\ \cos{\alpha}\cos{\beta} &= \frac{\cos\left(\alpha - \beta\right) + \cos\left(\alpha + \beta\right)}{2}\\ \sin{\alpha}\cos{\beta} &= \frac{\sin\left(\alpha + \beta\right) + \sin\left(\alpha - \beta\right)}{2}\\ \cos{\alpha}\sin{\beta} &= \frac{\sin\left(\alpha + \beta\right) - \sin\left(\alpha - \beta\right)}{2}\\ \tan{\alpha}\cot{\alpha} &= \sin{\alpha}\csc{\alpha} = \cos{\alpha}\sec{\alpha} = 1\\ \sin^2{\alpha} &= \frac{1 - \cos{2\alpha}}{2}\\ \cos^2{\alpha} &= \frac{1 + \cos{2\alpha}}{2}\\ \sin^3{\alpha} &= \frac{3\sin{\alpha} - \sin{3\alpha}}{4}\\ \cos^3{\alpha} &= \frac{3\cos{\alpha} + \cos{3\alpha}}{4}\\ \sin^4{\alpha} &= \frac{3 - 4\cos{2\alpha} + \cos{4\alpha}}{8}\\ \cos^4{\alpha} &= \frac{3 + 4\cos{2\alpha} + \cos{4\alpha}}{8}\\ \sin^5{\alpha} &= \frac{10\sin{\alpha} - 5\sin{3\alpha} + \sin{5\alpha}}{16}\\ \cos^5{\alpha} &= \frac{10\cos{\alpha} + 5\cos{3\alpha} + \cos{5\alpha}}{16}\\ \end{align}\]

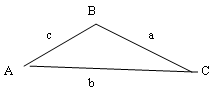

OBLIQUE TRIANGLES

(no right angle, angles A,B,C are opposite of legs a,b,c)

Law of Sines:

\[\begin{equation*}\frac{a}{\sin{A}} = \frac{b}{\sin{B}} = \frac{c}{\sin{C}}\end{equation*}\]

Law of Cosines:

\[\begin{align} a^2 &= b^2 + c^2 - 2bc\cos{A}\\ b^2 &= a^2 + c^2 - 2ac\cos{B}\\ c^2 &= a^2 + b^2 - 2ab\cos{C}\\ \cos{C} &= \frac{a^2 +b^2 -c^2}{2ab}\\ \end{align}\]

Law of Tangents:

\[\begin{equation*}\frac{a-b}{a+b} = \frac{\tan\frac{a-b}{2}}{\tan\frac{a+b}{2}}\end{equation*}\]

Projection Formulas:

\[\begin{align} a &= b\cos{C} + c\cos{B}\\ b &= c\cos{A} + a\cos{C}\\ c &= a\cos{B} + b\cos{A}\\ \end{align}\]

Mollweide’s Check Formulas:

\[\begin{align} \frac{a-b}{c} &= \frac{\sin\frac{A-B}{2}}{\cos\frac{C}{2}}\\ \frac{a+b}{c} &= \frac{\cos\frac{A-B}{2}}{\sin\frac{C}{2}}\\ \end{align}\]

2.4 Matrix Algebra

2.4.1 Fundamentals

Given two matrices, \(A, B\) where \(A\) is an \(i \times m\) matrix and \(B\) is an \(m \times j\) matrix, that is, the numbers of columns of the first matrix equals the number of rows of the second matrix, we define matrix multiplication as follows: \[\begin{equation*}A \times B = A B = C\end{equation*}\] where the entry in the ith row and jth column is given by \(c_{ij}\) where: \[\begin{equation*}c_{ij} = \sum_{k=1}^m (a_{ik}b_{kj}).\end{equation*}\] In other words, we take the dot product of the ith row and the jth column.

For example, the product of a pair of, \(2 \times 2\) matrices is:

\[\begin{equation*} \begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix} \begin{bmatrix} b_{11} & b_{12} \\ b_{21} & b_{22} \end {bmatrix} = \begin{bmatrix} a_{11}b_{11}+a_{12}b_{21} & a_{11}b_{12}+a_{12}b_{22} \\ a_{21}b_{11}+a_{22}b_{21} & a_{21}b_{12}+a_{22}b_{22} \end{bmatrix} \end{equation*}\]

The identity matrix \(I\) occupies the same position in matrix algebra that the value of unity does in ordinary algebra. It is a square matrix consisting of ones on the principle diagonal and zeros everywhere else:

\[\begin{equation*} I = \begin{bmatrix} 1 & 0 & \dots & 0 \\ 0 & 1 & \dots & 0 \\ \vdots & \vdots & \ddots & \vdots\\ 0 & 0 & \dots & 1 \end{bmatrix} \end{equation*}\]

For any matrix \(A\), \(A I = I A = A\).

The inverse matrix \(A^{-1}\), if it exists, is the multiplicative inverse under matrix multiplication. That is, \[\begin{equation*}A A^{-1} = A^{-1}A = I.\end{equation*}\]

2.4.2 Cofactors and Determinants

We denote the determinant of a square matrix by \(|A|\). For a two-by-two matrix, the determinant is as follows:

\[\begin{equation*} \begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc. \end{equation*}\]

Formally, for any \(n \times n\) matrix, the determinant is: \[\begin{equation*}|A| = \sum_{i=1}^{k}a_{ij} C_{ij}\end{equation*}\] where \[\begin{equation*}C_{ij} = (-1)^{i+j}M_{ij}\end{equation*}\] where the minor matrix \(M_{ij}\) is in the \((n-1) \times (n-1)\) matrix resulting when the ith row and jth column are removed from \(A\). This holds when the sum above is taken over any row, \(i\), or column \(j\). The cofactor is \(C_{ij}\) above.

Arbitrarily expanding about the first row of a 3 x 3 matrix gives the determinant:

\[\begin{equation*} |A| = (-1)^{1+1} a_{11} \begin{vmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{vmatrix} + (-1)^{1+2}a_{12} \begin{vmatrix} a_{21} & a_{23} \\ a_{22} & a_{33} \end{vmatrix} + (-1)^{1+3}a_{13} \begin{vmatrix} a_{21} & a_{22} \\ a_{22} & a_{23} \end{vmatrix}. \end{equation*}\]

2.4.3 Computing the Inverse of a Matrix

There is a straightforward (but computationally inefficient and intensive) four-step method for computing the inverse of a matrix \(A\).

Step 1 Compute the determinant of \(A\). If the determinant is zero or does not exist, the matrix is said to be singular and an inverse does not exist.

Step 2 Transpose matrix \(A\), denoted \(A^T\).

Step 3 Replace each element \(a_{ij}\) of the transposed matrix by its cofactor, \(A_{ij}\). The resulting matrix is called the adjoint matrix, \(adj\left[A\right]\).

Step 4 Divide the adjoint matrix by the determinant.

Example. Solve the following set of simultaneous equations.

\[\begin{align} 3x_1 + 2x_2 - 2x_3 &= y_1 \\ -x_1 +x_2 +4x_3 &= y_2 \\ 2x_1 -3x_2 +4x_3 &= y_3 \end{align}\]

We can express this as \(Ax = y\). If the matrix is invertible, then the solution of the system is given by \(x = A^{-1} y\).

Step 1. Compute the determinant: \(|A| = 70\).

Step 2. Transpose the matrix.

\[\begin{equation*} A^T = \begin{bmatrix} 3 & -1 & -2 \\ 2 & 1 & -3 \\ -2 & 4 & 4 \end{bmatrix} \end{equation*}\]

Step 3. Determine the adjoint matrix by replacing each element in \(A^T\) by its Cofactor.

\[\begin{equation*} adj\left[A\right] = \begin{bmatrix} \begin{vmatrix} \hphantom{-}1 & -3 \\ \hphantom{-}4 & \hphantom{-}4 \end{vmatrix} & - \begin{vmatrix} \hphantom{-}2 & -3 \\ -2 & \hphantom{-}4 \end{vmatrix} & \hphantom{-} \begin{vmatrix} \hphantom{-}2 & \hphantom{-}1 \\ -2 & \hphantom{-}4 \end{vmatrix} \\ - \begin{vmatrix} -1 & \hphantom{-}2 \\ \hphantom{-}4 & \hphantom{-}4 \end{vmatrix} & \hphantom{-} \begin{vmatrix} \hphantom{-}3 & \hphantom{-}2 \\ -2 & \hphantom{-}4 \end{vmatrix} & - \begin{vmatrix} \hphantom{-}3 & -1 \\ -2 & \hphantom{-}4\end{vmatrix} \\ \hphantom{-} \begin{vmatrix} -1 & \hphantom{-}2 \\ \hphantom{-}1 & -3 \end{vmatrix} & - \begin{vmatrix} \hphantom{-}3 & \hphantom{-}2 \\ \hphantom{-}2 & -3 \end{vmatrix} & \hphantom{-} \begin{vmatrix} \hphantom{-}3 & -1 \\ \hphantom{-}2 & \hphantom{-}1\end{vmatrix} \\ \end{bmatrix} = \begin{bmatrix} 16 & -2 & 10 \\ 12 & 16 & -10 \\ 1 & 13 & 5 \\ \end{bmatrix} \end{equation*}\]

Step 4. Divide by the determinant.

\[\begin{equation*} \left[ A \right]^{-1} = \frac{1}{70} \begin{bmatrix} 16 & -2 & 10 \\ 12 & 16 & -10 \\ 1 & 13 & 5 \\ \end{bmatrix} \end{equation*}\]

Then, if \(y_1 = 1, y_2 = 13\), and \(y_3 = 8\):

\[\begin{equation*} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \frac{1}{70} \begin{bmatrix} 16 & -2 & 10 \\ 12 & 16 & -10 \\ 1 & 13 & 5 \end{bmatrix} \begin{bmatrix} 1 \\ 13 \\ 8 \end{bmatrix} \end{equation*}\]

\[\begin{align} x_1 &= \frac{1}{70} \left( 16 - 26 + 80 \right) = \frac{70}{70} &= 1 \\ x_2 &= \frac{1}{70} \left( 12 0 208 - 80 \right) = \frac{140}{70} &= 2 \\ x_3 &= \frac{1}{70} \left( 1 + 169 + 40 \right) = \frac{210}{70} &= 3 \\ \end{align}\]

2.4.4 Cramer’s Rule

Given a matrix, \(A\) and vectors, \(x, b\), we have a system of equations \(A x = b\). If the determinant of the matrix exists, then let \(A_r\) be the matrix obtained from \(A\) by replacing the \(r\)th column with the vector \(b\). Then the system of equations has a unique solution: \[\begin{equation*}x_r = \det(A)/ \det(A_r).\end{equation*}\]

Example of Cramer’s Rule

\[\begin{equation*} \begin{bmatrix} 1 & 0 & 2\\ -3 & 4 & 6 \\ -1 & -2 & 3 \\ \end{bmatrix} \begin{bmatrix} x_1 \\ x_2 \\ x_3 \end{bmatrix} = \begin{bmatrix} 6 \\ 30 \\ 8 \end{bmatrix} \end{equation*}\]

\[\begin{align} A &= \begin{bmatrix} 1 & 0 & 2 \\ -3 & 4 & 6 \\ -1 & -2 & 3 \end{bmatrix} & A_1 = \begin{bmatrix} 6 & 0 & 2 \\ 30 & 4 & 6 \\ 8 & -2 & 3 \end{bmatrix} \\ A_2 &= \begin{bmatrix} 1 & 6 & 2 \\ -3 & 30 & 6 \\ -1 & 8 & 3 \end{bmatrix} & A_3 = \begin{bmatrix} 1 & 0 & 6 \\ -1 & 4 & 30 \\ -3 & -2 & 8 \end{bmatrix} \\ \end{align}\]

\[\begin{align} x_1 &= \frac{\det\left( A_1 \right)}{\det\left( A \right)} = \frac{-40}{44} = \frac{-10}{11} \\ x_2 &= \frac{\det\left( A_2 \right)}{\det\left( A \right)} = \frac{72}{44} = \frac{18}{11} \\ x_3 &= \frac{\det\left( A_3 \right)}{\det\left( A \right)} = \frac{152}{44} = \frac{38}{11} \\ \end{align}\]

2.5 Vector Algebra

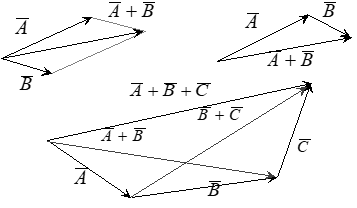

2.5.1 Addition

Given vectors \(\bar{A}\) and \(\bar{B}\), where the \(i\)th component of \(\bar{A}\) is given by \(a_i\), we define addition \(\bar{A} + \bar{B}\) component-wise, such that the \(i\)th component of \(\bar{A} + \bar{B}\) is given by \(a_i + b_i\).

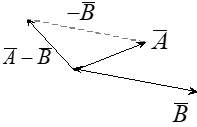

2.5.2 Subtraction

We define vector subtraction just as we defined vector addition above.

2.5.3 Scalar Multiplication

Given a real number, \(m\), and vectors \(\bar{A}\) and \(\bar{B}\), where the ith component of \(\bar{A}\) is given by \(a_i\), we define scalar multiplication \(m \bar{A}\) component-wise, such that the ith component of \(m \bar{A}\) is given by \(m a_i\).

When \(m\) and \(a+i\) are real numbers, the usual properties apply:

Commutative \(m \bar{A} = \bar{A} m\).

Associative \(m (n \bar{A}) = (mn) \bar{A}\).

Distributive \((m + n) \bar{A} = m \bar{A} + n \bar{A}\).

When \(a+i\) are real numbers, we have the following properties that apply to vectors:

Commutative \(\bar{A} + \bar{B} = \bar{B} + \bar{A}\).

Associative \(\bar{A} + (\bar{B} + \bar{C}) = (\bar{A} + \bar{B}) + \bar{C}\).

Distributive \(m (\bar{A} + \bar{B}) = m \bar{A} + m \bar{B}\).

2.5.4 Dot Product

Given vectors \(\bar{A}\) and \(\bar{B}\), where the \(i\)th component of \(\bar{A}\) is given by \(a_i\) and the vectors have the same dimension, \(n\), we define the dot product \(\bar{A} \cdot \bar{B}\) as follows: \[\begin{equation*}\bar{A} \cdot \bar{B} = \sum_{i=1}^{n}a_i b_i .\end{equation*}\] For example, given three-dimensional vectors, we have: \[\begin{equation*}\bar{A} \cdot \bar{B} = a_1 b_1 + a_2 b_2 + a_3 b_3 .\end{equation*}\] The terms dot product, scalar product, and inner product usually mean the same thing.

2.5.5 Norm

The norm or vector norm, denoted \[\begin{equation*}\left| \bar{A} \right|,\end{equation*}\] is the magnitude of the vector. We compute it thus: \[\begin{equation*}\left| \bar{A} \right| = \sqrt{\sum_{i=1}^{n} a_i^2} .\end{equation*}\] For example, given a three-dimensional vector in the \(xyz\) plane, we have: \[\begin{equation*}\left| \bar{A} \right| = \sqrt{a_x^2 + a_y^2 + a_z^2} .\end{equation*}\] The two types of notation, \(| \bar{A} |\) and \(|| \bar{A} ||\) are equivalent.

With this additional notation, we can also define the dot product this way: \[\begin{equation*}\bar{A} \cdot \bar{B} = \left| \bar{A} \right| \left| \bar{B} \right| \cos{\theta} ,\end{equation*}\] where \(\theta\) is the angle between the vectors.

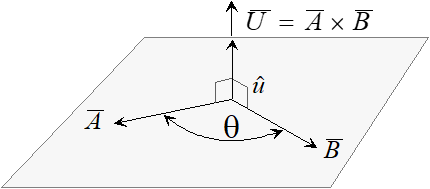

2.5.6 Vector Cross Product

The cross product is an operation on vectors in three dimensional space denoted \(\bar{A} \times \bar{B} .\) We can define it in terms of matrix determinants in the following way.

\[\begin{equation*} \bar{A} \times \bar{B} = \begin{vmatrix} \hat{i} & \hat{j} & \hat{k}\\ a_x & a_y & a_z\\ b_x & b_y & b_z \end{vmatrix}. \end{equation*}\]

Expanding this expression gives us:

\[\begin{equation*} \bar{A} \times \bar{B} = \begin{vmatrix} a_y & a_z\\ b_y & b_z \end{vmatrix} \hat{i} + \begin{vmatrix} a_x & a_z\\ b_x & b_z \end{vmatrix} \hat{j} + \begin{vmatrix} a_x & a_y\\ b_x & b_y \end{vmatrix} \hat{k}. \end{equation*}\]

\[\begin{equation*}\hat{i} \times \hat{i} = \hat{j} \times \hat{j} = \hat{k} \times \hat{k} = 0\end{equation*}\] \[\begin{equation*}\hat{i} \times \hat{j} = \hat{k} \quad \hat{j} \times \hat{k} = \hat{i} \quad \hat{k} \times \hat{i} = \hat{j}\end{equation*}\] \[\begin{equation*}\hat{j} \times \hat{i} = -\hat{k} \quad \hat{k} \times \hat{j} = -\hat{i} \quad \hat{i} \times \hat{k} = -\hat{j}\end{equation*}\]

2.5.7 Vector Differentiation

Let \(\vec{r}(t)\) be a vector-valued function, denoted in this way: \(\vec{r}(t) = (x(t),y(t))\), which is an example of a function that returns a position vector. A similar and equivalent notation is as follows: \(\vec{r}(t) = x(t)\hat{i} + y(t)\hat{j}\).

The first derivative of \(\vec{r}(t)\) with respect to time, \(t\), is a vector-valued function that denotes velocity. This function returns a vector tangential to the trajectory with a magnitude equal to the speed of the particle.

The second derivative of \(\vec{r}(t)\) with respect to time, \(t\), is a vector-valued function that denotes acceleration. This function returns a vector tangential to the velocity vector with a magnitude equal to the acceleration of the particle.

With this notation, the normal differenation operations hold for vector-valued functions along with the following.

Derivative of a Sum \[\begin{equation*}\frac{d}{dt}\left( \vec{r}(t) + \vec{s}(t) \right) = \frac{d}{dt}\vec{r}(t) + \frac{d}{dt}\vec{s}(t).\end{equation*}\]

Derivative of a Dot Product \[\begin{equation*}\frac{d}{dt}\left( \vec{r}(t) \cdot \vec{s}(t) \right) = \vec{r}(t) \cdot \frac{d}{dt}\vec{s}(t) + \frac{d}{dt}\vec{r}(t) \cdot \vec{s}(t).\end{equation*}\]

Derivative of a Cross Product \[\begin{equation*}\frac{d}{dt}\left( \vec{r}(t) \times \vec{s}(t) \right) = \vec{r}(t) \times \frac{d}{dt}\vec{s}(t) + \frac{d}{dt}\vec{r}(t) \times \vec{s}(t).\end{equation*}\]

Derivative of a Scalar Product If \(f(t)\) is a scalar-valued function, then \[\begin{equation*}\frac{d}{dt}\left( f(t)\vec{r}(t) \right) = f(t)\frac{d}{dt}\vec{r}(t) + \left(\frac{d}{dt} f(t)\right) \vec{r}(t).\end{equation*}\]

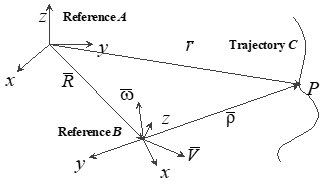

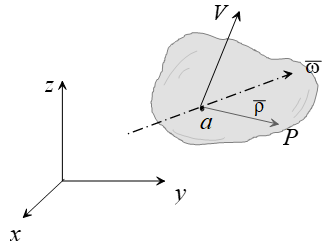

2.5.8 Motion of a point using two reference systems

Reference A can be considered the inertial frame while Rotation of the B reference relative to the A reference must be considered when observing motion with respect to the A reference system.

Note: Unit vectors are along the B system axes. Subscripts denote reference system. Reference B can be equivalent to a maneuvering aircraft.

\[\begin{equation*}\bar{\rho} = x \hat{i} + y \hat{j} + z \hat{k}\end{equation*}\]

\[\begin{equation*}\left( \frac{d \bar{\rho}}{dt} \right)_B = \dot{x} \hat{i} + \dot{y} \hat{j} + \dot{z} \hat{k}\end{equation*}\]

\[\begin{equation*}\left( \frac{d \bar{\rho}}{dt} \right)_A = \left( \dot{x}\hat{i} + \dot{y}\hat{j} + \dot{z}\hat{k} \right) + \left( x \dot{\hat{i}} + y \dot{\hat{j}} + z \dot{\hat{k}} \right)\end{equation*}\]

\[\begin{equation*}\left( \frac{d \bar{\rho}}{dt} \right)_A = \left( \frac{d \bar{\rho}}{dt} \right)_B + \bar{\omega} \times \bar{\rho}\end{equation*}\]

The velocities of the particle \(P\) relative to the \(A\) and to the \(B\) references, respectively, are as follows:

\[\begin{equation*}\bar{V}_A = \left( \frac{d\bar{r}}{dt} \right)_A\end{equation*}\]

\[\begin{equation*}\bar{V}_B = \left( \frac{d\bar{\rho}}{dt} \right)_B\end{equation*}\]

These velocities can be related by noting that \(\bar{r} = \bar{R} + \bar{\rho}\).

Taking the derivative with respect to time for the \(A\) reference: \[\begin{equation*}\bar{V}_A = \left(\frac{d \bar{r}}{dt} \right)_A = \left( \frac{d \bar{R}}{dt} \right)_A + \left( \frac{d \bar{\rho}}{dt} \right)_A\end{equation*}\]

The term \(\left(\frac{d \bar{r}}{dt} \right)_A\) is the velocity of the origin of \(B\) reference relative to the \(A\) reference. We denote \[\begin{equation*}\left(\frac{d \bar{r}}{dt} \right)_A = \dot{\overline{R}}.\end{equation*}\]

Substituting for \(\left( \frac{d \bar{\rho}}{dt} \right)_A\) gives the following: \[\begin{equation*}\bar{V}_A = \dot{\overline{R}} + \bar{V}_B + \bar{\omega} \times \bar{\rho}.\end{equation*}\]

The term is the “transport velocity” and is the only velocity if point P is rigidly attached to reference B.

A similar derivation for acceleration gives the following: \[\begin{equation*}\bar{a}_A = \bar{a}_B + 2(\bar{\omega} \times \bar{V}_B) + \ddot{\overline{R}} + (\dot{\bar{\omega}} \times \bar{\rho}) + \bar{\omega} \times (\bar{\omega} \times \bar{\rho}).\end{equation*}\]

Here \(\bar{\omega} \times (\bar{\omega} \times \bar{\rho})\) is the centripetal acceleration, \(2(\bar{\omega} \times \bar{V}_B)\) is the Coriolis acceleration, and the transport acceleration, which is the only acceleration if point P is rigidly attached to reference B, is given by the following: \[\begin{equation*}\ddot{\overline{R}} + (\dot{\bar{\omega}} \times \bar{\rho}) + \bar{\omega} \times (\bar{\omega} \times \bar{\rho}).\end{equation*}\]

2.5.9 Motion of a point using one reference system

Reference A can be considered the inertial frame while the body can be equivalent to a maneuvering aircraft.

A derivation similar to the previous gives us the following for velocity: \[\begin{equation*}\dot{\bar{\rho}} = \bar{\omega} \times \bar{\rho},\end{equation*}\]

and acceleration: \[\begin{equation*}\bar{a}_b = \bar{a}_a + (\dot{\bar{\omega}} \times \bar{\rho}) + \bar{\omega} \times (\bar{\omega} \times \bar{\rho}).\end{equation*}\]

2.6 Probability and Statistics

Definitions:

Population: The set of all possible observations

Sample: Any subset of a population

Homogeneous Sample: The sample comes from 1 population only

Random Sample: Equal probability of selecting any member of the population

Independence (of events A and B): P(A and B) = P(A)*P(B)

Sample and Population Mean (Average value): \[\begin{equation*}\mu = \bar{x} = \frac{1}{N} \sum_{i=1}^N{x_i}\end{equation*}\]

Mode (Most commonly occurring value in a sample)

Median (middle value, 50th percentile. Half of the sample values are greater and half are smaller)

Deviation: \[\begin{equation*}d_i = x_i - \bar{x}\end{equation*}\]

Population Variance: \[\begin{equation*}\sigma^2 = \frac{1}{N} \sum_{i=1}^N{(x_i - \bar{x}})^2 = \frac{1}{N} \sum_{i=1}^N{d_i^2}\end{equation*}\]

Population Standard Deviation (square root of variance): \[\begin{equation*}\sigma = \sqrt{\frac{1}{N} \sum_{i=1}^N{(x_i - \bar{x}})^2}\end{equation*}\]

Sample Standard Deviation: \[\begin{equation*}s = \sqrt{\frac{1}{N-1} \sum_{i=1}^N{(x_i - \bar{x}})^2}\end{equation*}\]

Discrete Probability Distributions:

Uniform The probability of any outcome in a given set is the same. If there are \(N\) possible outcomes in a set, then the probability of a given outcome is \(p=1/N\). For example, when rolling a fair dice with \(N=6\) sides, the probability that the toss will result in any given side is \(p = 1/6\).

Binomial The probability that the random variable \(X = x\) in \(N\) independent events, each having probability \(p\) of success, and \(1-p\) of failure. \[\begin{equation*}P(X = k) = \binom{N}{k}p^x (1-p)^{N-x}\end{equation*}\] where \[\begin{equation*}\binom{N}{k} = \frac{N!}{k!(N-k)!}.\end{equation*}\]

For example, tossing a fair coin N times where \(p = 1/2\) is the probability of getting a head on any toss. If \(X\) indicates the number of heads in \(N\) tosses, then we have \(P(X = x) = (1/2)^x (1/2)^{N-x}\). For \(N = 4\) we have the following table:

| x | P(X=x) |

|---|---|

| \(0\) | \(1/16\) |

| \(1\) | \(1/4\) |

| \(2\) | \(3/8\) |

| \(3\) | \(1/4\) |

| \(4\) | \(1/16\) |

Continuous Distributions Continuous distributions are defined for all \(x \in [a,b]\), where \(a, b \in \mathbb{R}\) or for all \(x \in (-\infty, \infty)\), that is, the whole real number line. The probability for any single point is zero; that is, \(P(X=x) = 0\). Instead, one must work with probability on an interval, e.g., \(P(0 < x)\) or \(P(a < x < b)\).

The Normal Distribution The probability density function of the Normal Distribution is given by: \[\begin{equation*}f(x) = \frac{1}{\sqrt{2 \pi \sigma}}\mathrm{e}^{\frac{-(x-\mu)^2}{2\sigma^2}}\end{equation*}\]

The Standard Normal Distribution The Standard Normal Distribution is simply the Normal Distribution with \(\mu = 0\) and \(\sigma=1\).

Error Probable An interval centered at the mean that contains one-half of the distribution.

Circular Error Probable A circle centered at the mean, in a bivariate normal distribution, that contains one-half of the distribution.

Confidence Intervals An interval estimate of a statistic.

Central Limit Theorem The sample mean of data sampled from a normally distributed population is itself normally distributed with mean, \(\mu\), and variance, \(\sigma^2/\sqrt{n}\), where the population distribution is \(N(\mu,\sigma^2)\) and \(n\) is the sample size and usually \(n \geq 30\).

Hypothesis Testing Begins with an assumption (hypothesis), usually about the underlying population distribution of some measured quantity or computed error. Select values for the hypothesis and alternate hypothesis(es) that partition the sample space. Collect N samples of the population test statistic or parameter. There are two types of errors: Type 1 errors reject the hypothesis when it is true; Type II accept the hypothesis when it is false.

2.7 Standard Series

A Taylor Series is the expansion of a function about a point, \(a\), given only the value of the function and all of its derivatives at that point. It is used to estimate functions when other analytical techniques will not work. \[\begin{equation*}f(x) = \sum_{i=0}^{\infty} \frac{{f(a)(x-a)^i}}{i!} = f(a) + f'(x-a) + \frac{f''(a)(x-a)^2}{2} + \dots + \frac{f^{(n)}(a)(x-a)^n}{n!} + \dots\end{equation*}\]

The Maclaurin Series is a Taylor Series with \(a = 0\).

The Binomial Series is a Taylor Series with \(f(x)=(a + x)^n\). When n is a natural number, the result is a polynomial as follows: \[\begin{equation*}(a+x)^n = a^n + na^{n-1}x + \frac{n(n-1)a^{n-2}x^2}{2} + \frac{n(n-1)(n-2)a^{n-3}x^3}{3!} + \dots + x^n\end{equation*}\]

The Taylor Series expansion of an Exponential function gives the following: \[\begin{equation*}a^x = 1 + x \ln a + \frac{(x \ln a)^2}{2} + \frac{(x \ln a)^3}{3!} + \dots\end{equation*}\]

In the special case when \(a = e\): \[\begin{equation*}\mathrm{e}^x = 1 + x + \frac{x^2}{2} + \frac{x^3}{3!}+ \dots\end{equation*}\]

Hyperbolic \(\sin\) and \(\cos\) functions are another special case: \[\begin{equation*}\cosh x = \frac{(\mathrm{e}^x + \mathrm{e}^{-x})}{2} = 1 + \frac{x^2}{2} + \frac{x^4}{4!}+ \dots\end{equation*}\] \[\begin{equation*}\sinh x = \frac{(\mathrm{e}^x - \mathrm{e}^{-x})}{2} = x + \frac{x^3}{3!} + \frac{x^5}{5!}+ \dots\end{equation*}\]

The Taylor Series expansion of an Logarithmic function gives the following. When \(0 < x < 2\): \[\begin{equation*}\ln x = (x-1) - \frac{(x-1)^2}{2} + \frac{(x-1)^3}{3} + \dots\end{equation*}\] When \(x > 1/2\): \[\begin{equation*}\ln x = \frac{(x-1)}{x} - \frac{(x-1)^2}{2x^2} + \frac{(x-1)^3}{3x^3} + \dots\end{equation*}\] When \(0 < x\): \[\begin{equation*}\ln x = 2 \left[ \frac{(x-1)}{x+1} - \frac{(x-1)^3}{3(x+1)^3} + \frac{(x-1)^5}{5(x-1)^5} + \dots \right]\end{equation*}\]

The following are Taylor Series expansion of Trigonometric functions: \[\begin{equation*}\sin x = x - \frac{x^3}{3!} + \frac{x^5}{5!} -\frac{x^7}{7!}+ \dots\end{equation*}\] \[\begin{equation*}\cos x = x - \frac{x^2}{2!} + \frac{x^4}{4!} -\frac{x^6}{6!}+ \dots\end{equation*}\] \[\begin{equation*}\tan x = x + \frac{x^3}{3} + \frac{2x^5}{15} + \frac{17x^7}{315}+ \dots \text{ whenever } (x^2 < \pi^2/4)\end{equation*}\] \[\begin{equation*}\sin^{-1} x = x + \frac{1}{2}\frac{x^3}{3} + \frac{1}{2}\frac{3}{4}\frac{x^5}{5} + \frac{1}{2}\frac{3}{4}\frac{5}{6}\frac{x^7}{7!} + \dots \text{for } |x|<1\end{equation*}\] \[\begin{equation*}\cos^{-1} x = \frac{\pi}{2} - \sin^{-1}x \text{ for } |x|<1\end{equation*}\] \[\begin{equation*}\tan^{-1} x = x - \frac{x^3}{3!} + \frac{x^5}{5!} -\frac{x^7}{7!} + \dots\text{ for } |x|<1\end{equation*}\] \[\begin{equation*}\ln(\sin x) = \ln x - \frac{x^2}{6} - \frac{x^4}{180} - \frac{x^6}{2835}- \dots \text{for } x^2 < \pi^2\end{equation*}\] \[\begin{equation*}\ln(\cos x) = - \frac{x^2}{s} - \frac{x^4}{12} - \frac{x^6}{45} - \frac{x^8}{2520} \dots \text{for } x^2 < \frac{\pi^2}{4}\end{equation*}\] \[\begin{equation*}\ln(\tan x) = \ln x + \frac{x^2}{3} - \frac{7x^4}{90} - \frac{62x^6}{2835}+ \dots \text{for } x^2 < \frac{\pi^2}{4}\end{equation*}\] \[\begin{equation*}\mathrm{e}^{\sin x} = 1 + x + \frac{x^2}{2!} - \frac{3x^4}{4!} -\frac{8x^5}{5!} + \frac{3x^2}{6!} + \dots\end{equation*}\] \[\begin{equation*}\mathrm{e}^{\cos x} = e \left(1 - \frac{x^2}{2!} +\frac{4x^2}{4!} - \frac{31x^6}{6!} + \dots \right)\end{equation*}\] \[\begin{equation*}\mathrm{e}^{\tan x} = 1 + x + \frac{x^2}{2!} + \frac{3x^3}{3!} + \frac{9x^4}{4!} + \frac{37x^5}{5!} + \dots \text{for } x^2 < \frac{\pi^2}{4}\end{equation*}\]

2.8 Derivative Table

(Moyer and Ayres 2013; Eshbach 1936)

- \(x\) is the independent variable

- \(u\) and \(v\) are dependent on \(x\)

- \(w\) is dependent on \(u\)

- \(a\) and \(n\) are constants

- \(\log{x}\) is the common logarithm to base 10, \(\log_{10}{x}\)

- \(\ln{x}\) is logarithm to the base \(e\), \(\log_e{x}\)

| Fundamental Derivatives |

| \[\begin{equation*}\frac{da}{dx} = 0\end{equation*}\] |

| \[\begin{equation*}\frac{d\left(ax\right)}{dx} = a\end{equation*}\] |

| \[\begin{equation*}\frac{dx^n}{dx} = nx^{n-1}\end{equation*}\] |

| \[\begin{equation*}\frac{d\left(u + v \right)}{dx} = \frac{du}{dx} + \frac{dv}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d\left(uv \right)}{dx} = u\frac{dv}{dx} + v\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d\left(\frac{u}{v} \right)}{dx} = \frac{1}{v^2} \left( v\frac{du}{dx} - u\frac{dv}{dx} \right)\end{equation*}\] |

| \[\begin{equation*}\frac{dw}{dx} = \frac{dw}{du}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{du^n}{dx} = nu^{n-1}\frac{du}{dx}\end{equation*}\] |

| Expressions Containing Exponential and Logarithmic Functions |

| \[\begin{equation*}\frac{d \ln{x}}{dx} = \frac{1}{x}\end{equation*}\] |

| \[\begin{equation*}\frac{d \ln{u}}{dx} = \frac{1}{u}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d \log{u}}{dx} = \frac{\log{e}}{u}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d\mathrm{e}^x}{dx} = \mathrm{e}^x\end{equation*}\] |

| \[\begin{equation*}\frac{da^x}{dx} = a^x \ln{a}\end{equation*}\] |

| \[\begin{equation*}\frac{da^u}{dx} = a^u \ln{a}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{du^v}{dx} = vu^{v-1}\frac{du}{dx} + u^v \ln{u}\frac{dv}{dx}\end{equation*}\] |

| Expressions Containing Trigonometric Functions |

| \[\begin{equation*}\frac{d \sin{x}}{dx} = \cos{x} \text{ or } \frac{d \sin{u}}{dx} = \cos{u}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d \cos{x}}{dx} = -\sin{x} \text{ or } \frac{d \cos{u}}{dx} = -\sin{u}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d \tan{x}}{dx} = \sec^2{x} \text{ or } \frac{d \tan{u}}{dx} = \sec^2{u}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d \sec{x}}{dx} = \sec{x}\tan{x} \text{ or } \frac{d \sec{u}}{dx} = \sec{u}\tan{u}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d \cot{x}}{dx} = -\csc^2{x} \text{ or } \frac{d \cot{u}}{dx} = -\csc^2{u}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d \sin^{-1}{x}}{dx} = \frac{1}{\sqrt{1-x^2}} \text{ or } \frac{d \sin^{-1}{u}}{dx} = \frac{1}{\sqrt{1-u^2}}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d \cos^{-1}{x}}{dx} = -\frac{1}{\sqrt{1-x^2}} \text{ or } \frac{d \cos^{-1}{u}}{dx} = -\frac{1}{\sqrt{1-u^2}}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d \tan^{-1}{x}}{dx} = \frac{1}{1+x^2} \text{ or } \frac{d \tan^{-1}{u}}{dx} = \frac{1}{1+u^2}\frac{du}{dx}\end{equation*}\] |

| \[\begin{equation*}\frac{d \cot^{-1}{x}}{dx} = -\frac{1}{1+x^2} \text{ or } \frac{d \cot^{-1}{u}}{dx} = -\frac{1}{1+u^2}\frac{du}{dx}\end{equation*}\] |

2.9 Integral Table

(Moyer and Ayres 2013; Eshbach 1936)

- \(x\) is any variable

- \(u\) is any function of \(x\)

- \(w\) is dependent on \(u\)

- \(a\) and \(b\) are arbitrary constants

- \(C\), the constant of integration, has been omitted from this table, but should be added to the result of every integration

| Fundamental Integrals |

| \[\begin{equation*}\int_{}{} a \, dx = ax\end{equation*}\] |

| \[\begin{equation*}\int_{}{} a \, f\left(x\right) dx = a \int_{}{} f\left(x\right) dx \end{equation*}\] |

| \[\begin{equation*}\int_{}{} \left(u+v\right) dx = \int_{}{} u\,dx + \int_{}{}v\,dx \end{equation*}\] |

| \[\begin{equation*}\int_{}{} u\,dv = uv - \int_{}{}v\,du \end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{u\,dv}{dx} dx = uv - \int_{}{} v \frac{du}{dx} dx\end{equation*}\] |

| \[\begin{equation*}\int_{}{} x^n dx = \frac{x^{n+1}}{n+1} \text{, } n \neq -1\end{equation*}\] |

| \[\begin{equation*}\int_{}{} x^{-1} dx = \ln{x} \end{equation*}\] |

| \[\begin{equation*}\int_{}{} w \left( u \right) dx = \int_{}{} w\left( u \right) \frac{dx}{du} u \end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{dx}{a^2 + x^2} = \frac{1}{a} \tan^{-1} \frac{x}{a}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{dx}{\sqrt{a^2 - x^2}} = \sin^{-1} \frac{x}{a}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{dx}{\sqrt{a^2 \pm x^2}} = \ln \left( x - \sqrt{a^2 \pm x^2} \right)\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \sqrt{a^2 - u^2} = \frac{1}{2} \left( u \sqrt{a^2 - x^2} + a^2 \sin^{-1} \frac{u}{a} \right)\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{du}{a^2 + u^2} = \frac{1}{a} \tan^{-1} \frac{u}{a} \text{, } a > 0 \end{equation*}\] |

| Expressions Containing Exponential and Logarithmic Functions |

| \[\begin{equation*}\int_{}{} \frac{dx}{x} = \ln{x}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \mathrm{e}^{x} dx = \mathrm{e}^{x}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \mathrm{e}^{ax} dx = \frac{\mathrm{e}^{ax}}{a}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} b^{ax} dx = \frac{b^ax}{a \ln{b}}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \ln{x} dx = x \ln{x} - x \end{equation*}\] |

| \[\begin{equation*}\int_{}{} b^{u} du = \frac{b^u}{\ln{u}}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} x \mathrm{e}^{ax} dx = \frac{\mathrm{e}^ax}{a^2} \left( ax - 1 \right)\end{equation*}\] |

| \[\begin{equation*}\int_{}{} x b^{ax} dx = \frac{x b^{ax}}{a \ln{b} } - \frac{b^{ax}}{a^2 \left( \ln{b} \right) }\end{equation*}\] |

| \[\begin{equation*}\int_{}{} x^2 \mathrm{e}^{ax} dx = \frac{\mathrm{e}^{ax}}{a^3} \left(a^2 x^2 - 2ax + 2 \right)\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \ln{ax} dx = x \ln{ax} - x \end{equation*}\] |

| \[\begin{equation*}\int_{}{} x \ln{ax} dx = \frac{x^2}{2} \ln{ax} - \frac{x^2}{4}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} x^2 \ln{ax} dx = \frac{x^3}{3} \ln{ax} - \frac{x^3}{9}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \left( \ln{ax} \right)^2 dx = x \left( \ln{ax} \right)^2 - 2x \ln{ax} + 2x \end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{dx}{x \ln{ax}} = \ln{\left( \ln{ax} \right)}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{x^n}{\ln{ax}} dx = \frac{1}{a^{n+1}} \int_{}{} \frac{\mathrm{e}^{y} dy}{y} \text{, where } y = \left(n + 1\right) \ln{ax}\end{equation*}\] |

| Expressions Containing Trigonometric Functions |

| \[\begin{equation*}\int_{}{} \sin{x} dx = -\cos{x}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \cos{x} dx = \sin{x}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \tan{x} dx = -\ln{ \left( \cos{x} \right)}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \cot{x} dx = \ln{\left( \sin{x} \right)}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \sec{x} dx = \ln{ \left( \sec{x} + \tan{x} \right)}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \csc{x} dx = \ln{ \left( \csc{x} - \cot{x} \right)}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \sin^2{u} du = \frac{1}{2} u - \frac{1}{2} \sin{u} \cos{u}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \cos^2{u} du = \frac{1}{2} u + \frac{1}{2} \sin{u} \cos{u}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \csc^2{u} du = -\cot{u}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \tan^2{u} du = \tan{u} - u \end{equation*}\] |

| \[\begin{equation*}\int_{}{} \cot^2{u} du = -\cot{u} - u \end{equation*}\] |

| \[\begin{equation*}\int_{}{} \sin{ax} dx = -\frac{1}{a} \cos{ax}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \sin^2{ax} dx = \frac{x}{2}-\frac{\sin{2ax}}{4a} \end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{dx}{\sin{ax}} = \frac{1}{a} \ln{ \tan{\frac{ax}{2}}}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{dx}{\sin^2{ax}} = -\frac{1}{a} \cot{ax}\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \frac{dx}{1 \pm \sin{ax}} = \mp \frac{1}{a} \tan{\left( \frac{\pi}{4} \mp \frac{ax}{2} \right) }\end{equation*}\] |

| \[\begin{equation*}\int_{}{} \sin{x} \cos{x} dx = \frac{1}{2} \sin^2{x}\end{equation*}\] |

2.10 Laplace Table

(Moyer and Ayres 2013; Eshbach 1936)

| Time Domain \(f(t) = \mathcal{L}^{-1} \left\{ F \left( s \right) \right\}\) | Frequency Domain \(F\left( s \right) = \mathcal{L} \left\{ f \left( t \right) \right\}\) |

|---|---|

| \[\begin{equation*}1 \text{ (step function)}\end{equation*}\] | \[\begin{equation*}\frac{1}{s} \text{, where } \left(s > 0\right)\end{equation*}\] |

| \[\begin{equation*}t\end{equation*}\] | \[\begin{equation*}\frac{1}{s^2} \text{, where } \left(s > 0\right)\end{equation*}\] |

| \[\begin{equation*}t^{n-1}\end{equation*}\] | \[\begin{equation*}\frac{\left( n-1 \right)!}{s^n} \text{, where } \left(s > 0\right)\end{equation*}\] |

| \[\begin{equation*}\sqrt{t}\end{equation*}\] | \[\begin{equation*}\frac{{\sqrt \pi }}{{2{s^{\frac{3}{2}}}}} \text{, where } \left(s > 0\right)\end{equation*}\] |

| \[\begin{equation*}\frac{1}{\sqrt{t}}\end{equation*}\] | \[\begin{equation*}\frac{{\sqrt \pi }}{{{s^{\frac{1}{2}}}}} \text{, where } \left(s > 0\right)\end{equation*}\] |

| \[\begin{equation*}{t^{n - \frac{1}{2}}},\,\,\,\,\,n = 1,2,3, \ldots\end{equation*}\] | \[\begin{equation*}\frac{{1 \cdot 3 \cdot 5 \cdots \left( {2n - 1} \right)\sqrt \pi }}{{{2^n}{s^{n + \frac{1}{2}}}}} {, where } \left(s > 0\right)\end{equation*}\] |

| \[\begin{equation*}\mathrm{e}^{at}\end{equation*}\] | \[\begin{equation*}\frac{1}{s-a} \text{, where } \left(s > a \right)\end{equation*}\] |

| \[\begin{equation*}t\mathrm{e}^{at}\end{equation*}\] | \[\begin{equation*}\frac{1}{{\left( {s - a} \right)}^{2}} \text{, where } \left(s > a \right)\end{equation*}\] |

| \[\begin{equation*}{t^n}{{e}^{at}},\,\,\,\,\,n = 1,2,3, \ldots\end{equation*}\] | \[\begin{equation*}\frac{{n!}}{{{{\left( {s - a} \right)}^{n + 1}}}} \text{, where } \left(s > a \right)\end{equation*}\] |

| \[\begin{equation*}\sin{at}\end{equation*}\] | \[\begin{equation*}\frac{a}{s^2 + a^2} \text{, where } \left(s > 0 \right)\end{equation*}\] |

| \[\begin{equation*}\cos{at}\end{equation*}\] | \[\begin{equation*}\frac{s}{s^2 + a^2} \text{, where } \left(s > 0 \right)\end{equation*}\] |

| \[\begin{equation*}\mathrm{e}^{bt} \sin{at}\end{equation*}\] | \[\begin{equation*}\frac{a}{\left(s - b \right)^2 + a^2} \text{, where } \left(s > b \right)\end{equation*}\] |

| \[\begin{equation*}\mathrm{e}^{bt} \cos{at}\end{equation*}\] | \[\begin{equation*}\frac{s-b}{\left(s - b \right)^2 + a^2} \text{, where } \left(s > b \right)\end{equation*}\] |

| \[\begin{equation*}t \sin{at}\end{equation*}\] | \[\begin{equation*}\frac{2as}{\left(s^2 - a^2\right)^2} \text{, where } \left(s > a \right)\end{equation*}\] |

| \[\begin{equation*}t \cos{at}\end{equation*}\] | \[\begin{equation*}\frac{s^2 - a^2}{\left(s^2 + a^2\right)^2} \text{, where } \left(s > 0 \right)\end{equation*}\] |

| \[\begin{equation*}\sinh{at}\end{equation*}\] | \[\begin{equation*}\frac{a}{\left(s^2 - a^2\right)^2} \text{, where } \left(s > \lvert a \rvert \right)\end{equation*}\] |

| \[\begin{equation*}\cosh{at}\end{equation*}\] | \[\begin{equation*}\frac{s}{\left(s^2 - a^2\right)^2} \text{, where } \left(s > \lvert a \rvert \right)\end{equation*}\] |

| \[\begin{equation*}\sin \left(at + b\right)\end{equation*}\] | \[\begin{equation*}\frac{s\sin{b} + a \cos{b}}{\left(s^2 + a^2\right)^2}\end{equation*}\] |

| \[\begin{equation*}\cos \left(at + b\right)\end{equation*}\] | \[\begin{equation*}\frac{s\cos{b} - a \sin{b}}{\left(s^2 + a^2\right)^2}\end{equation*}\] |

| \[\begin{equation*}\frac{\mathrm{e}^{at} - \mathrm{e}^{bt}}{a - b}\end{equation*}\] | \[\begin{equation*}\frac{1}{\left(s-a\right)\left(s-b\right)}\end{equation*}\] |

| \[\begin{equation*}\frac{a\mathrm{e}^{at} - b\mathrm{e}^{bt}}{a - b}\end{equation*}\] | \[\begin{equation*}\frac{s}{\left(s-a\right)\left(s-b\right)}\end{equation*}\] |

| \[\begin{equation*}\delta \text{ (impulse function)}\end{equation*}\] | \[\begin{equation*}1\end{equation*}\] |

| \[\begin{equation*}\text{square wave, period }=2c\end{equation*}\] | \[\begin{equation*}\frac{1}{s \left( 1 + \mathrm{e}^{-cs} \right)}\end{equation*}\] |

| \[\begin{equation*}\text{triangular wave, period }=2c\end{equation*}\] | \[\begin{equation*}\frac{1 - \mathrm{e}^{-cs}}{s^2 \left( 1 + \mathrm{e}^{-cs} \right)}\end{equation*}\] |

| \[\begin{align} at \text{ for } 0 \leq t < c \\ \text{sawtooth wave, period } = c \end{align}\] | \[\begin{equation*}\frac{a \left( 1 + cs - \mathrm{e}^{-cs} \right) }{s^2 \left( 1 - \mathrm{e}^{-cs} \right) }\end{equation*}\] |

| \[\begin{equation*}\sin{at} \sin{bt}\end{equation*}\] | \[\begin{equation*}\frac{2abs}{\left( s^2 + \left(a+b\right)^2\right) \left( s^2 + \left(a-b\right)^2\right)}\end{equation*}\] |

| \[\begin{equation*}\frac{1 - \cos{at}}{a^2} \end{equation*}\] | \[\begin{equation*}\frac{1}{s \left( s^2 + a^2 \right) }\end{equation*}\] |

| \[\begin{equation*}\frac{at - \sin{at}}{a^3} \end{equation*}\] | \[\begin{equation*}\frac{1}{s^2 \left( s^2 + a^2 \right) }\end{equation*}\] |

| \[\begin{equation*}\frac{\sin{at} - at\cos{at}}{2a^3} \end{equation*}\] | \[\begin{equation*}\frac{1}{\left( s^2 + a^2 \right)^2 }\end{equation*}\] |

If M, N,b are positive↩︎